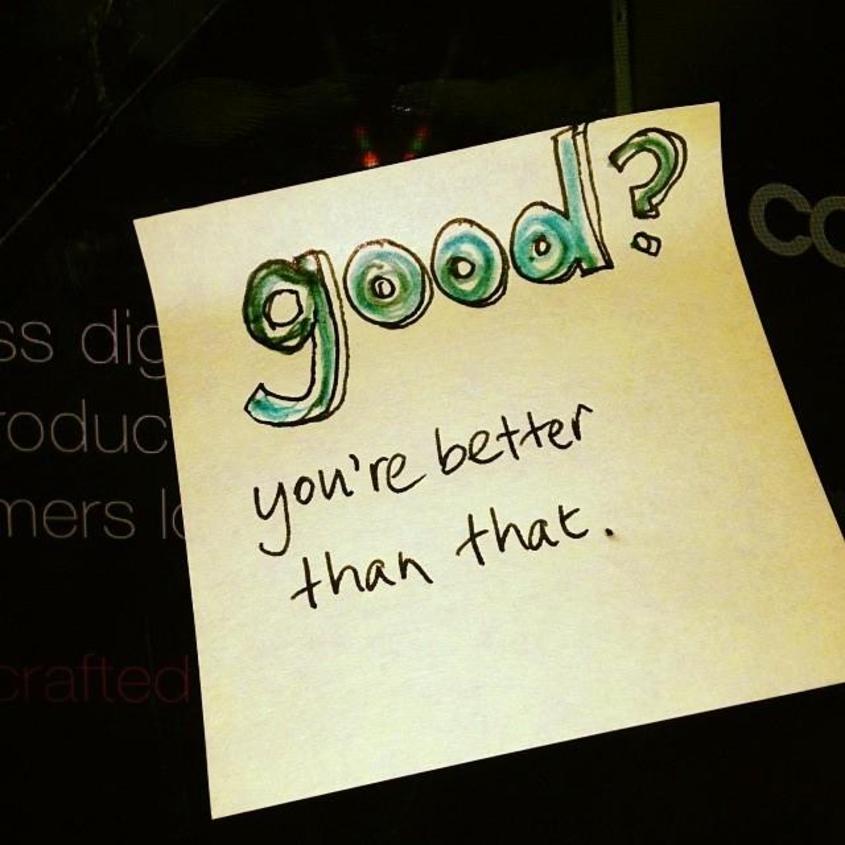

Is good, good enough?

Anyone who has ever launched a marketing campaign or a new product or service will know this feeling. You work hard to get something out there: maybe it’s a test campaign, maybe it’s an MVP (Minimum Viable Product), or maybe it’s a new feature. You set it free into the big bad world and... it does well! High fives in the office. OK, it hasn’t set the world on fire, but you feel that you were realistic in your expectations and it’s pretty much hit them. That’s good, right?

But deep down, you’d hoped it would be better than OK. Has it justified the original investment? Yes – just about. And over time it will pay back what was spent on it, but wouldn’t it have been nice to have really taken the world by storm? Wouldn’t it be amazing to tell your boss that you’ve delivered an unfeasibly large ROI? Wouldn’t it be great to have the kudos from your friends and peers for being the person that launched something that was an overnight success? Never mind – at least things are heading in the right direction.

What happens next? Well, you keep watching and waiting. You’ve got a few ideas that might improve things, maybe something that you’ve seen someone else doing, so you commit more budget to making those happen. And it’s OK to do that because let’s face it, it’s making money and that’s a good thing. Because good is good. It’s way better than bad. Isn’t it?

Actually. Good isn’t good enough. Not when you could be great.

But this is a daily reality and we’ve definitely been guilty of it ourselves. We do something and it does OK. Then something else shiny grabs our attention. The idea of finessing something that is already doing OK isn’t anywhere near as much fun as the next big thing. But in fact, finessing the product, making a number of small experiments to test whether what you’ve done is as good as it could be, is exactly what you should be doing next.

How do you turn good into great? There are two things to really consider:

- Time, money and resources

- Drivers of growth

Clearly, if we had all the time, money and resources in the world, we could just keep at it until we hit the right formula: the one thing that turned our mediocre offering into something so valuable to the end user that they paid for it in their millions rather than in their tens or hundreds. But, for most of us, our job is to get to where we need to be using as little of those things as possible.

Added to that is the notion that there is no point in improving something unless it impacts on something that will ultimately drive growth. How do we work that out? Well, in the case of our Twitter monitoring tool, Twilert, for example, there are four key drivers for growth:

- Number of free trialists

- Percentage conversion of free trialists to paid subscribers

- Subscription package value (we currently offer three)

- Lifetime value (or in other words, how long they subscribe for)

So anything we do in the future has to show a proven and direct improvement in one or more of these four drivers, or else we’ve wasted our time (and money) and it has to be deemed a failure. As an example, if we invest 2 weeks in improving the design and then another 4 weeks recoding the front-end to reflect that design, and everyone tells us how great the new design is, but it has no impact whatsoever on the number of people taking out a free trial, then that experiment was a failure. We may as well not have bothered, apart from the fact that it made us feel prouder to show people our product.
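To make the point concrete, here is a rough sketch of how the four drivers might combine. It assumes, purely for illustration, that revenue from a month’s worth of trialists is simply the product of the four drivers; the `Drivers` class, the function name and every number below are hypothetical and are not Twilert’s real figures.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Drivers:
    """The four growth drivers, with made-up units for illustration."""
    trialists_per_month: int      # number of free trialists
    trial_to_paid_rate: float     # share of trialists who become paid subscribers
    monthly_package_value: float  # average subscription price per month
    lifetime_months: float        # how long a subscriber stays


def cohort_revenue(d: Drivers) -> float:
    """Assumed model: revenue from one month's trialists is the product of the drivers."""
    return (d.trialists_per_month * d.trial_to_paid_rate
            * d.monthly_package_value * d.lifetime_months)


# Hypothetical baseline, not real data.
baseline = Drivers(trialists_per_month=1000, trial_to_paid_rate=0.02,
                   monthly_package_value=10.0, lifetime_months=12)

# A redesign that everyone loves but that moves none of the drivers.
after_redesign = replace(baseline)

# A change that lifts conversion from 2% to 3% and nothing else.
after_conversion_fix = replace(baseline, trial_to_paid_rate=0.03)

for label, d in [("baseline", baseline),
                 ("praised redesign", after_redesign),
                 ("conversion fix", after_conversion_fix)]:
    print(f"{label}: {cohort_revenue(d):.0f}")
```

Under this assumed model, six weeks spent on the praised redesign leaves the number exactly where it was, while a single point of extra conversion moves it by half.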

Anyone who has read The Lean Startup will recognise this approach as what Eric Ries describes as “tuning the engine”.

The fact that people said “loving your work” but we were unable to show any impact on customer behaviour is what Ries calls “Vanity Metrics”. So, where does this leave us?

It leaves us much more focused, both on understanding whether or not a change is going to be beneficial and on finding this out with the least expense in time, money and resources.

Take the simple example of Twilert above. Rather than redesign the whole service and then code those changes into the product, which might take 6 weeks in total, why not redesign one or two pages and then A/B test them: split potential customers into two groups and test one design against the other on one part of the site? Maybe this could be done in a week rather than six. The outcome might show improvements to one or more of the core drivers for the customers who saw the new design, in which case we would feel confident to roll it out across the site. Alternatively, it might have no impact whatsoever, in which case we’ve saved ourselves 5 weeks that we could spend on something more useful.
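For anyone who wants to see the mechanics, here is a minimal sketch of an A/B test. It is not how Twilert does it: the function names, the hashing approach and the visitor numbers are all assumptions for illustration. The first function splits visitors into two stable groups; the second uses a two-proportion z-test, one common way of checking whether a difference in conversion is likely to be more than chance.

```python
import hashlib
import math


def assign_variant(visitor_id: str) -> str:
    """Place a visitor in group 'A' (old design) or 'B' (new design).

    Hashing the ID means the same visitor always sees the same design.
    """
    digest = hashlib.sha256(visitor_id.encode("utf-8")).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def two_proportion_z(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 0.0, 1.0  # no conversions at all (or all converted): nothing to compare
    z = (rate_b - rate_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical week: 1,000 visitors per group, counting free-trial sign-ups.
z, p = two_proportion_z(conv_a=40, n_a=1000, conv_b=60, n_b=1000)
print(f"visitor-123 sees design {assign_variant('visitor-123')}")
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value suggests the new design really did move the driver; a large one suggests we should spend the remaining five weeks elsewhere.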

Ries talks about testing hypotheses (or ideas) where these tests can be proven to show cause and effect. Take Twilert again: we have made two assumptions about offering a free trial:

- That people need to use the service to see if it’s right for them before they are prepared to pay for it.

- That if we ask people for their credit card details up-front (rather than when their free trial has ended), then we will get significantly fewer people starting the free trial.

The fact is, we don’t know if either of these things is true. And even if they are both true, given that what really interests us is the volume of people who are paying for the service, it might not even matter. If, for example, in a typical week we get 250 free trialists and 2 of them convert to paid, and then we test an ‘up-front credit card requirement’ and the number of free trialists drops to 100, but 25 of them convert to paid, then it’s pretty clear what strategy we should take moving forward.
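The arithmetic behind that example is worth spelling out. The sketch below uses only the illustrative figures from the paragraph above (250 trialists and 2 conversions versus 100 trialists and 25 conversions); the helper name `conversion_rate` is ours and the numbers are not real Twilert results.

```python
def conversion_rate(trialists: int, paid: int) -> float:
    """Share of free trialists who went on to pay."""
    return paid / trialists


# Illustrative weekly figures from the example, not measured data.
scenarios = {
    "card at end of trial": {"trialists": 250, "paid": 2},
    "card up front": {"trialists": 100, "paid": 25},
}

for name, week in scenarios.items():
    rate = conversion_rate(week["trialists"], week["paid"])
    print(f"{name}: {week['trialists']} trialists, "
          f"{week['paid']} paid, {rate:.1%} conversion")
```

Fewer people start the trial in the second scenario, but conversion goes from 0.8% to 25% and the number of paying customers, which is what we actually care about, goes from 2 to 25.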

In the good ol’ days, we tended to work on gut feel and a sense of what constituted good practice, or on whatever improvements the designers and developers really wanted to make, and then hoped for the best. But increasingly it’s clear that, whilst experience and creativity are a necessity for identifying new ideas, without a robust way of validating whether each and every one of those ideas improves the numbers that matter to the business, we’re nothing more than highly educated guessers.